2022. 5. 21. 15:59ㆍ🛠 Data Engineering/Apache Spark

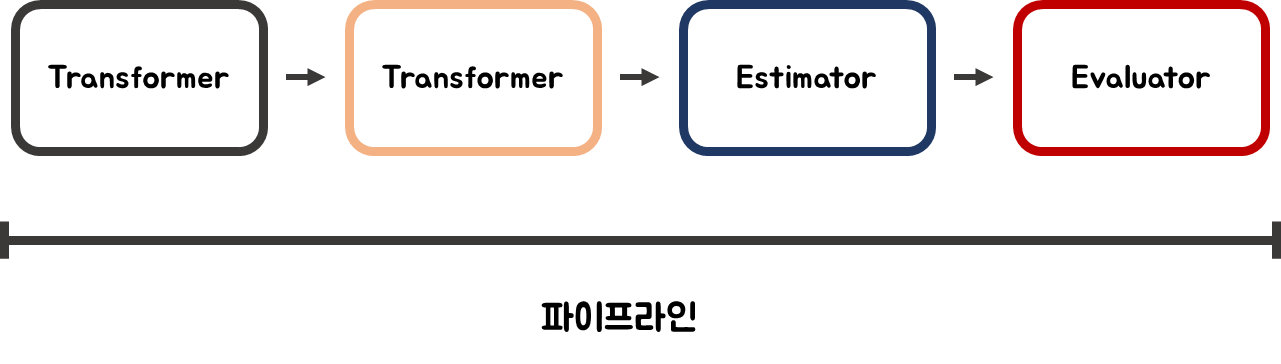

Spark MLlib supports a Pipeline component.

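A Pipeline represents a machine learning workflow and holds a sequence of Stages. It can also be persisted: a Pipeline (or a fitted PipelineModel) is saved with save() or write().save() and restored with load(). This is different from DataFrame.persist(), which only caches data.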
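To make the idea of "stages" concrete, here is a toy sketch in plain Python. The classes `MeanCenterer`, `Doubler`, and `ToyPipeline` are hypothetical names invented for this illustration and are not Spark's API. The sketch only mimics the general pattern: fitting runs the stages in order, and each stage sees the output of the previous one.

```python
# Toy illustration only: these classes are hypothetical and are NOT Spark's API.

class MeanCenterer:
    """Estimator-like stage: learns the mean in fit(), subtracts it in transform()."""

    def fit(self, values):
        self.mean = sum(values) / len(values)
        return self

    def transform(self, values):
        return [v - self.mean for v in values]


class Doubler:
    """Transformer-like stage: nothing to learn, so fit() is a no-op."""

    def fit(self, values):
        return self

    def transform(self, values):
        return [2 * v for v in values]


class ToyPipeline:
    def __init__(self, stages):
        self.stages = stages

    def fit(self, values):
        # Fit each stage on the output of the previous stage, in order.
        for stage in self.stages:
            values = stage.fit(values).transform(values)
        return self

    def transform(self, values):
        # Apply the already-fitted stages in the same order.
        for stage in self.stages:
            values = stage.transform(values)
        return values


toy = ToyPipeline([MeanCenterer(), Doubler()]).fit([1.0, 2.0, 3.0])
print(toy.transform([4.0]))  # the mean learned from the training values is 2.0
```

The point to take away is that new data is transformed with what was learned during `fit`, which is also why the real pipeline later in this post is fitted on the training set only.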

So what does the actual code look like?

Let's build an MLlib Pipeline using the taxi data covered in the previous post.

The basic setup is explained in the earlier Spark posts.

[Data https://mengu.tistory.com/50?category=932924]

[SparkSQL] Downloading, preprocessing, and analyzing taxi data feat. TLC

"This post is a summary of material from a Fast Campus lecture."

Basic Settings

This is the basic setup. The common imports are done up front, and a SparkSession is opened.

# Font settings for matplotlib
from matplotlib import font_manager, rc

font_path = 'C:\\WINDOWS\\Fonts\\HBATANG.TTF'
font = font_manager.FontProperties(fname=font_path).get_name()
rc('font', family=font)

# Basic settings
import os
import findspark
findspark.init(os.environ.get("SPARK_HOME"))

import pyspark
from pyspark import SparkConf, SparkContext
import pandas as pd
import faulthandler
faulthandler.enable()

from pyspark.sql import SparkSession

MAX_MEMORY = "5g"
spark = SparkSession.builder.master('local').appName("taxi-fare-prediction")\
    .config("spark.executor.memory", MAX_MEMORY)\
    .config("spark.driver.memory", MAX_MEMORY).getOrCreate()

# Locations of the data files
zone_data = "C:/DE study/data-engineering/01-spark/data/taxi_zone_lookup.csv"
trip_files = "C:/DE study/data-engineering/01-spark/data/trips/*"

# Load the data
trips_df = spark.read.csv(f"file:///{trip_files}", inferSchema=True, header=True)
zone_df = spark.read.csv(f"file:///{zone_data}", inferSchema=True, header=True)

# Print the schemas
trips_df.printSchema()
zone_df.printSchema()

# Register temp views so the DataFrames can be queried with SQL
trips_df.createOrReplaceTempView("trips")
zone_df.createOrReplaceTempView("zone")

root
 |-- VendorID: integer (nullable = true)
 |-- tpep_pickup_datetime: string (nullable = true)
 |-- tpep_dropoff_datetime: string (nullable = true)
 |-- passenger_count: integer (nullable = true)
 |-- trip_distance: double (nullable = true)
 |-- RatecodeID: integer (nullable = true)
 |-- store_and_fwd_flag: string (nullable = true)
 |-- PULocationID: integer (nullable = true)
 |-- DOLocationID: integer (nullable = true)
 |-- payment_type: integer (nullable = true)
 |-- fare_amount: double (nullable = true)
 |-- extra: double (nullable = true)
 |-- mta_tax: double (nullable = true)
 |-- tip_amount: double (nullable = true)
 |-- tolls_amount: double (nullable = true)
 |-- improvement_surcharge: double (nullable = true)
 |-- total_amount: double (nullable = true)
 |-- congestion_surcharge: double (nullable = true)

root
 |-- LocationID: integer (nullable = true)
 |-- Borough: string (nullable = true)
 |-- Zone: string (nullable = true)
 |-- service_zone: string (nullable = true)

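Getting the DataFrame we need

We select the columns needed to predict the fare.

(1) Payment type

(2) Pickup time (hour)

(3) Pickup location

(4) Drop-off location

(5) Pickup day of the week

(6) Passenger count

(7) Trip distance

(8) Fare (total_amount)

Each of these columns contains outliers and missing values, so we filter them out in the SQL query that selects the data. The preprocessing is explained in detail in the post linked above.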

query = '''
SELECT
    PULocationID as pickup_location_id,
    DOLocationID as dropoff_location_id,
    payment_type,
    HOUR(tpep_pickup_datetime) as pickup_time,
    DATE_FORMAT(TO_DATE(tpep_pickup_datetime), 'EEEE') AS day_of_week,
    passenger_count,
    trip_distance,
    total_amount
FROM
    trips
WHERE
    total_amount < 5000
    AND total_amount > 0
    AND trip_distance > 0
    AND trip_distance < 500
    AND passenger_count < 4
    AND TO_DATE(tpep_pickup_datetime) >= '2021-01-01'
    AND TO_DATE(tpep_pickup_datetime) < '2021-08-01'
'''

# Build the DataFrame from the SQL query
data_df = spark.sql(query)
data_df.createOrReplaceTempView('data')
data_df.printSchema()

root
 |-- pickup_location_id: integer (nullable = true)
 |-- dropoff_location_id: integer (nullable = true)
 |-- payment_type: integer (nullable = true)
 |-- pickup_time: integer (nullable = true)
 |-- day_of_week: string (nullable = true)
 |-- passenger_count: integer (nullable = true)
 |-- trip_distance: double (nullable = true)
 |-- total_amount: double (nullable = true)
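The two derived columns in the query, `pickup_time` and `day_of_week`, come from `HOUR(...)` and `DATE_FORMAT(..., 'EEEE')`. As a rough plain-Python equivalent, the sketch below extracts the same two values from a single timestamp string. The helper name `pickup_features` is hypothetical, and the timestamp format `YYYY-MM-DD HH:MM:SS` is an assumption about how the pickup column is written.

```python
from datetime import datetime

# Full English day names, indexed the way datetime.weekday() counts (Monday = 0).
DAY_NAMES = ["Monday", "Tuesday", "Wednesday", "Thursday",
             "Friday", "Saturday", "Sunday"]


def pickup_features(timestamp):
    """Return (hour, day name) for one pickup timestamp string.

    Assumes the format 'YYYY-MM-DD HH:MM:SS' (an assumption for this sketch).
    """
    dt = datetime.strptime(timestamp, "%Y-%m-%d %H:%M:%S")
    return dt.hour, DAY_NAMES[dt.weekday()]


print(pickup_features("2021-01-01 00:30:10"))  # 2021-01-01 was a Friday
```

The hour stays numeric while the day name is a string, which is why `day_of_week` is treated as a categorical column later on.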

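Splitting into train and test sets

# Split into train and test DataFrames
train_df, test_df = data_df.randomSplit([0.8, 0.2], seed=1)

# Save both splits as Parquet to cut loading time later
data_dir = "C:/save path"  # placeholder: replace with your own directory
train_df.write.mode("overwrite").parquet(f'{data_dir}/train/')
test_df.write.mode("overwrite").parquet(f'{data_dir}/test/')

# Read the saved splits back
train_df = spark.read.parquet(f'{data_dir}/train/')
test_df = spark.read.parquet(f'{data_dir}/test/')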
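A seeded random split can be pictured with the plain-Python sketch below. This is only an illustration of the idea, not Spark's implementation, and `random_split` is a hypothetical helper. Each row is assigned independently, so the 80/20 ratio is approximate, while the seed makes the result repeatable.

```python
import random


def random_split(rows, train_weight, seed):
    """Assign each row to train with probability train_weight, otherwise to test."""
    rng = random.Random(seed)  # a fixed seed makes the split reproducible
    train, test = [], []
    for row in rows:
        if rng.random() < train_weight:
            train.append(row)
        else:
            test.append(row)
    return train, test


rows = list(range(1000))
train, test = random_split(rows, 0.8, seed=1)
print(len(train), len(test))  # roughly 800 and 200, not exactly
```

Running it again with the same seed gives the same two lists, which is what makes results comparable between runs.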

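Building the Pipeline

Before assembling the pipeline, the most important step is deciding how to preprocess the data. For this dataset, the minimum preprocessing needed for training is (1) one-hot encoding, (2) normalization (scaling), and (3) vectorization. Once these are settled, we build the pipeline.

(1) One-hot encoding

Only the categorical columns need one-hot encoding.

- StringIndexer: assigns a numeric index to each category before one-hot encoding. Indices start at 0, and by default the most frequent label gets 0. ex) (strawberry, banana, chocolate) -> (0, 2, 1)

- OneHotEncoder: turns the index into a vector. ex) 0 -> [1, 0, 0] (conceptually; Spark stores a sparse vector and drops the last category by default)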
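The two steps can be sketched in plain Python as follows. This is a conceptual illustration, not Spark code: `fit_string_index` and `one_hot` are hypothetical helpers, the sample data is made up, and the alphabetical tie-break is an assumption of the sketch. It follows StringIndexer's default ordering by descending frequency, but unlike Spark's OneHotEncoder it returns a dense list and keeps every category.

```python
from collections import Counter


def fit_string_index(values):
    """Map each label to an index: most frequent label -> 0, next -> 1, and so on."""
    counts = Counter(values)
    # Descending frequency; ties broken alphabetically (assumption of this sketch).
    ordered = sorted(counts, key=lambda label: (-counts[label], label))
    return {label: idx for idx, label in enumerate(ordered)}


def one_hot(index, size):
    """Dense one-hot list with a 1 at position `index`."""
    return [1 if i == index else 0 for i in range(size)]


# Made-up sample values for illustration.
days = ["Monday", "Friday", "Friday", "Sunday", "Friday", "Monday"]
mapping = fit_string_index(days)
print(mapping)
print(one_hot(mapping["Monday"], len(mapping)))
```

The mapping is learned from the data once and then reused, which is why StringIndexer is a stage that has to be fitted before it can transform anything.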

# One-hot encoding
from pyspark.ml.feature import OneHotEncoder, StringIndexer

# Categorical columns
cat_feats = [
    'pickup_location_id',
    'dropoff_location_id',
    'day_of_week'
]

# List of stages that will make up the pipeline
stages = []

# Index each column first, then one-hot encode the indexed column.
for c in cat_feats:
    cat_indexer = StringIndexer(inputCol=c, outputCol=c + "_idx").setHandleInvalid("keep")
    onehot_encoder = OneHotEncoder(inputCols=[cat_indexer.getOutputCol()], outputCols=[c + '_onehot'])
    stages += [cat_indexer, onehot_encoder]

(2) Numeric data: vectorize and scale

Each numeric column is wrapped in a vector, and a scaler is then applied to it.
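What the scaler does to a single column can be sketched in plain Python. With its default settings, Spark's StandardScaler divides by the sample standard deviation and does not subtract the mean, and the sketch mirrors that. The helper names and the sample distances are made up for illustration.

```python
import statistics


def fit_std(values):
    """Learn the sample standard deviation (n - 1 in the denominator)."""
    return statistics.stdev(values)


def scale(values, std):
    """Divide by the learned standard deviation; the mean is left untouched."""
    return [v / std for v in values]


distances = [1.0, 2.0, 3.0, 4.0, 5.0]  # made-up trip distances
std = fit_std(distances)
scaled = scale(distances, std)

print(std)
print(scaled)
print(statistics.stdev(scaled))  # close to 1.0 after scaling
```

Because the standard deviation is learned in a fitting step, the same value is reused when the test set is transformed.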

# Vectorize and scale
from pyspark.ml.feature import VectorAssembler, StandardScaler

# Numeric columns
num_feats = [
    'passenger_count',
    'trip_distance',
    'pickup_time'
]

# Wrap each column in a vector, then apply the scaler.
for n in num_feats:
    num_assembler = VectorAssembler(inputCols=[n], outputCol=n + '_vector')
    num_scaler = StandardScaler(inputCol=num_assembler.getOutputCol(), outputCol=n + '_scaled')
    stages += [num_assembler, num_scaler]

* Checking the stages so far

stages

[StringIndexer_115f74e6efea,
 OneHotEncoder_714f494271bb,
 StringIndexer_806a3b8e8a32,
 OneHotEncoder_f7bc9266f650,
 StringIndexer_2f125ebb95a8,
 OneHotEncoder_eb212d50e427,
 VectorAssembler_5b7bfff3be42,
 StandardScaler_3e59d49af9ad,
 VectorAssembler_a61858dada0f,
 StandardScaler_c739fc7f7d49,
 VectorAssembler_f683b2eeb4d2,
 StandardScaler_96e5ba925088]

(3) Finally, combine everything with a VectorAssembler to produce a dataset that is ready for training.

# Input columns for the final assembler
assembler_inputs = [c + '_onehot' for c in cat_feats] + [n + '_scaled' for n in num_feats]
print(assembler_inputs)

['pickup_location_id_onehot',
 'dropoff_location_id_onehot',
 'day_of_week_onehot',
 'passenger_count_scaled',
 'trip_distance_scaled',
 'pickup_time_scaled']

# Add the final VectorAssembler to stages
assembler = VectorAssembler(inputCols=assembler_inputs, outputCol='feature_vector')
stages += [assembler]
stages

[StringIndexer_dfc09cc586be,
 OneHotEncoder_987fbfa36a2d,
 StringIndexer_bf2338365d7f,
 OneHotEncoder_5a91ea5195e8,
 StringIndexer_c416d64272f1,
 OneHotEncoder_0dfab0742066,
 VectorAssembler_4c5f47a3740c,
 StandardScaler_65dfe2363318,
 VectorAssembler_7e0a4e81ec39,
 StandardScaler_22d11d283c0a,
 VectorAssembler_c2b692153924,
 StandardScaler_debc924ffa61,
 VectorAssembler_c2c382815ebb]
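Conceptually, the final assembly step concatenates the per-column outputs into one flat feature vector per row. The plain-Python sketch below shows that idea only. `assemble` is a hypothetical helper, and the numbers are made up rather than taken from the taxi data.

```python
def assemble(*parts):
    """Concatenate vectors and scalars into one flat list of floats."""
    flat = []
    for part in parts:
        if isinstance(part, (list, tuple)):
            flat.extend(float(x) for x in part)
        else:
            flat.append(float(part))
    return flat


# Made-up row: two one-hot vectors followed by two scaled numeric values.
row = assemble([0, 1, 0], [1, 0], 0.63, 1.9)
print(row)
```

The order of the inputs fixes the position of each feature in the vector, so the same input order has to be used for the training and test data.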

(4) Finally, tie everything together with Pipeline()

# Build the pipeline
from pyspark.ml import Pipeline

transform_stages = stages
pipeline = Pipeline(stages=transform_stages)
fitted_transformer = pipeline.fit(train_df)

Prediction & Inference

With the pipeline fitted, pass both datasets through it to preprocess them.

vtrain_df = fitted_transformer.transform(train_df)
vtest_df = fitted_transformer.transform(test_df)

Create a linear regression model, train it, and predict

from pyspark.ml.regression import LinearRegression

lr = LinearRegression(
    maxIter=50,
    solver='normal',
    labelCol='total_amount',
    featuresCol='feature_vector'
)

# Train
model = lr.fit(vtrain_df)

# Predict on the test set
prediction = model.transform(vtest_df)

# Check the predictions
prediction.select(['trip_distance', 'day_of_week', 'total_amount', 'prediction']).show(5)

+-------------+-----------+------------+------------------+
|trip_distance|day_of_week|total_amount|        prediction|
+-------------+-----------+------------+------------------+
|          0.8|   Thursday|       120.3|   89.980284493094|
|        24.94|   Saturday|        70.8| 131.2247006891777|
|         0.01|  Wednesday|      102.36|13.995815841451657|
|          0.1|     Monday|       71.85|10.013635081914366|
|          0.5|    Tuesday|         7.8|   12.046081421887|
+-------------+-----------+------------+------------------+
only showing top 5 rows
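The `solver='normal'` option tells Spark to solve the least-squares problem through the normal equations instead of an iterative optimizer. With a single feature, that solution reduces to the familiar closed form for a slope and an intercept, sketched below in plain Python. `fit_line` is a hypothetical helper, and the data is made up so that the fare is exactly 3.0 + 2.5 * distance.

```python
def fit_line(xs, ys):
    """Closed-form least squares for y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up data that lies exactly on the line fare = 3.0 + 2.5 * distance.
distances = [1.0, 2.0, 3.0, 4.0]
fares = [5.5, 8.0, 10.5, 13.0]

slope, intercept = fit_line(distances, fares)
print(slope, intercept)
```

The real model in this post has many more features, mostly one-hot columns, but the fitting criterion is the same: minimize the squared difference between `total_amount` and the prediction.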

Evaluator

(1) RMSE

model.summary.rootMeanSquaredError

5.818945295076586

(2) R2

model.summary.r2

0.7997047915616821
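Note that `model.summary` is the training summary, so the two numbers above describe the fit on the training data. To score the test-set predictions, `pyspark.ml.evaluation.RegressionEvaluator` can compute the same metrics on the `prediction` DataFrame. The plain-Python sketch below shows what the two metrics measure. The helper names and the sample values are made up for illustration.

```python
import math


def rmse(y_true, y_pred):
    """Root mean squared error: the typical size of a prediction error."""
    squared_errors = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared_errors) / len(y_true))


def r2(y_true, y_pred):
    """R squared: share of the label's variance explained by the predictions."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot


# Made-up values for illustration.
actual = [10.0, 20.0, 30.0]
predicted = [12.0, 18.0, 30.0]

print(rmse(actual, predicted))
print(r2(actual, predicted))
```

RMSE is in the same unit as the label (here, dollars of `total_amount`), while R2 is unitless, which is why the two are usually reported together.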

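Performance is slightly better than in the previous post. In this post we looked at how to build a machine learning workflow with Spark. Many more preprocessing tools (feature transformers) are available, and a different combination may well produce a better model, so I encourage you to experiment on your own.

Good work getting this far.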
