2022. 4. 3. 14:27ㆍ🧪 Data Science/Paper review

The paper reviewed today is 'NEURAL MACHINE TRANSLATION BY JOINTLY LEARNING TO ALIGN AND TRANSLATE', published in 2014. It drew wide attention by proposing the Attention technique, an extension of the existing Seq2Seq approach. Attention is a mechanism that anyone working in NLP needs to know.

[Source url: https://arxiv.org/abs/1409.0473 , Cornell University]

[Detailed explanation of the Attention Mechanism: https://wikidocs.net/22893]

1. Summary

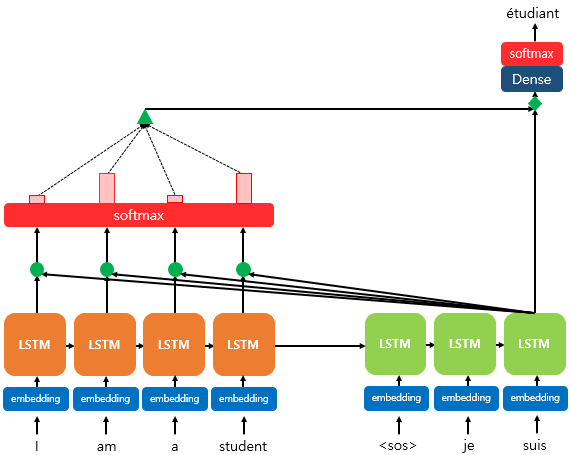

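Training with the existing Seq2Seq approach improved NLP performance. However, compressing all of a sentence's information into a single Context vector creates a bottleneck that worsens as the sentence to be compressed grows longer. The paper therefore proposes letting the model itself find, through soft alignment, the parts of the source sentence that are relevant to each prediction.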

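Before explaining Attention in detail, the authors review how Seq2Seq and RNNs work. The paper uses a bidirectional RNN as its encoder. An ordinary RNN cannot look at the elements that come later in the sequence, so this structure joins a forward RNN and a backward RNN so that both the preceding and the following elements can be consulted.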
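The idea of pairing a forward pass with a backward pass can be sketched in a few lines. This is only a toy illustration, not the paper's encoder: the scalar tanh cell, the function names `rnn_states` and `bidirectional_annotations`, and the weight values are all assumptions made for this example, whereas the paper uses vector-valued gated units with learned parameters.

```python
import math

def rnn_states(inputs, w_in, w_rec):
    """Run a toy scalar tanh RNN and return the hidden state at every step."""
    h, states = 0.0, []
    for x in inputs:
        h = math.tanh(w_in * x + w_rec * h)
        states.append(h)
    return states

def bidirectional_annotations(inputs):
    """Pair each position's forward state with its backward state."""
    # Forward RNN reads x_1 ... x_T (hypothetical weights).
    forward = rnn_states(inputs, w_in=0.5, w_rec=0.8)
    # Backward RNN reads x_T ... x_1 with its own weights; the result is
    # reversed again so that index j lines up with input position j.
    backward = rnn_states(inputs[::-1], w_in=0.3, w_rec=0.6)[::-1]
    return list(zip(forward, backward))

annotations = bidirectional_annotations([1.0, -2.0, 0.5])
```

Each element of `annotations` plays the role of one encoder vector h_j: its first component has only seen the inputs up to position j, while its second component has seen position j and everything after it.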

[Source: https://wikidocs.net/22893]

2. Understanding the formulas

The original Encoder-Decoder approach used a single c (Context vector) computed only from the encoder's h (hidden states).

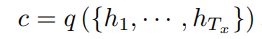

The model proposed by the authors, however, uses a slightly different Context vector.

Let's go through it step by step.

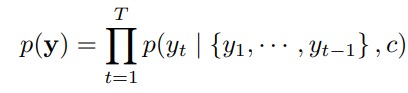

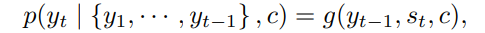

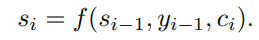

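First, compute a scalar e_ij that scores how well the decoder hidden state s used when predicting the i-th word (s_{i-1} in the paper's notation) matches the Encoder's j-th column vector h_j. Here a is the alignment model; any function that captures this similarity well can be used, and the paper parameterizes it as a feedforward neural network trained jointly with the rest of the model.

* A softmax function converts the scores into probability values.

* T_x is the number of words fed into the Encoder.

The similarity scalars computed in the previous step go into this softmax.

This gives the attention value α (alpha) that is defined when predicting the i-th word.

Each attention value is multiplied by the corresponding Encoder column vector h, and the products are summed to produce the Context vector.

The Context vector obtained this way then feeds into the computation of the i-th hidden state.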
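The three steps above (score, softmax, weighted sum) can be sketched in plain Python. The score below uses the additive form e_ij = v_a · tanh(W_a s_{i-1} + U_a h_j) given in the paper's appendix; the helper names, the toy dimensions, and every numeric value are assumptions chosen only for illustration, and in a real model W_a, U_a and v_a would be learned.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def matvec(m, v):
    return [dot(row, v) for row in m]

def alignment_score(s_prev, h_j, W_a, U_a, v_a):
    """Additive score e_ij = v_a . tanh(W_a s_prev + U_a h_j)."""
    pre = [p + q for p, q in zip(matvec(W_a, s_prev), matvec(U_a, h_j))]
    return dot(v_a, [math.tanh(z) for z in pre])

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(e - m) for e in scores]
    total = sum(exps)
    return [x / total for x in exps]

def context_vector(s_prev, annotations, W_a, U_a, v_a):
    """Return the attention weights and the weighted sum of annotations."""
    scores = [alignment_score(s_prev, h, W_a, U_a, v_a) for h in annotations]
    alphas = softmax(scores)
    dim = len(annotations[0])
    c = [sum(a * h[k] for a, h in zip(alphas, annotations)) for k in range(dim)]
    return alphas, c

# Toy values (hypothetical): T_x = 3 encoder vectors of size 2.
annotations = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
s_prev = [0.5, -0.5]
W_a = [[1.0, 0.0], [0.0, 1.0]]
U_a = [[1.0, 0.0], [0.0, 1.0]]
v_a = [1.0, 1.0]

alphas, c = context_vector(s_prev, annotations, W_a, U_a, v_a)
```

Because `alphas` always sums to 1, the Context vector `c` is a weighted average of the encoder vectors, and it is recomputed for every output position instead of being fixed once for the whole sentence.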

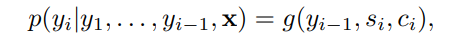

Finally, the elements computed so far are used to predict the word.
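In the paper's notation, these last two steps can be written roughly as follows. Here f stands for the decoder's recurrent unit, g for the output function that produces the word probabilities, y_{i-1} for the previously generated word, and x for the source sentence; the exact forms of f and g are left abstract in this sketch.

```latex
\begin{aligned}
s_i &= f(s_{i-1},\, y_{i-1},\, c_i) \\
p(y_i \mid y_1, \dots, y_{i-1}, \mathbf{x}) &= g(y_{i-1},\, s_i,\, c_i)
\end{aligned}
```

The key difference from the basic Encoder-Decoder is the subscript on c_i: every target word gets its own Context vector.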

3. Conclusion

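In the experiments, the longer the sentence, the more the Attention technique stood out.

Even the Attention model trained on sentences of up to 30 words outperformed the conventional encoder-decoder model trained on sentences of up to 50 words.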

[Key sentences excerpted from the paper's Conclusion]

We conjectured that the use of a fixed-length context vector is problematic for translating long sentences, based on a recent empirical study reported by Cho et al. (2014b) and Pouget-Abadie et al. (2014). In this paper, we proposed a novel architecture that addresses this issue. This lets the model focus only on information relevant to the generation of the next target word. The experiment revealed that the proposed RNNsearch outperforms the conventional encoder–decoder model (RNNencdec). We were able to conclude that the model can correctly align each target word with the relevant words. One of challenges left for the future is to better handle unknown, or rare words.
