2024. 9. 13. 15:30ㆍ🧪 Data Science/Paper review

These are my notes on a paper presented at our lab's paper seminar.

The paper is 'TabNet: Attentive Interpretable Tabular Learning'.

Paper link:

https://ojs.aaai.org/index.php/AAAI/article/view/16826

TabNet: Attentive Interpretable Tabular Learning | Proceedings of the AAAI Conference on Artificial Intelligence

Brief overview of the paper

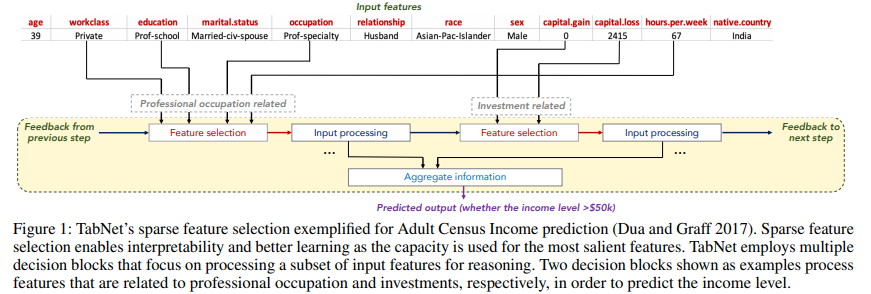

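For tabular data, decision-tree-based models are the most common choice. However, tree-based models have limited expressiveness and lack deep learning's ability to handle many different data types. TabNet tries to overcome these limits by using an attention mechanism that learns to select only the important features for each input.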

1. Introduction

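Tabular data is analyzed very widely, yet DT (decision tree) methods are still used more often than DNNs. DTs work well because they are easy to interpret and fast to train: by following the tree structure you can see which criteria were used to split the data. Ensemble methods such as Random Forests and XGBoost combine many trees to make predictions more robust (Random Forests, for example, average many trees to reduce the variance of the predictions).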

* Tree-based learning: at each step, efficiently selects the feature with the largest statistical information gain.
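To make "pick the feature with the largest information gain" concrete, here is a toy sketch of one greedy split step using the entropy-based criterion. The function names and the six-row dataset are hypothetical; real libraries are far more optimized (scikit-learn's trees default to Gini impurity, with `criterion='entropy'` available).

```python
import numpy as np

def entropy(labels):
    """Shannon entropy (bits) of a 1-D array of class labels."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return float(-(p * np.log2(p)).sum())

def information_gain(feature, labels, threshold):
    """Entropy reduction from splitting on `feature <= threshold`."""
    left = labels[feature <= threshold]
    right = labels[feature > threshold]
    if len(left) == 0 or len(right) == 0:
        return 0.0  # a split that sends everything one way gains nothing
    w_left = len(left) / len(labels)
    child = w_left * entropy(left) + (1 - w_left) * entropy(right)
    return entropy(labels) - child

def best_split(X, y):
    """One greedy tree step: try every (feature, threshold) pair, keep the best gain."""
    best = (None, None, -1.0)
    for j in range(X.shape[1]):
        for t in np.unique(X[:, j]):
            gain = information_gain(X[:, j], y, t)
            if gain > best[2]:
                best = (j, t, gain)
    return best

# Toy data: feature 0 separates the classes, feature 1 is noise
X = np.array([[1, 5], [2, 3], [3, 4], [7, 5], [8, 3], [9, 4]], dtype=float)
y = np.array([0, 0, 0, 1, 1, 1])
feature, threshold, gain = best_split(X, y)
print(feature, threshold, gain)
```

The split on feature 0 at threshold 3 separates the two classes perfectly, so it wins with a gain of one full bit.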

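That said, DTs require a lot of preprocessing (feature engineering) and are hard to train jointly with other data types such as images, so DNNs have the advantage for these more advanced use cases.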

2. Main Idea

2-0. TabNet overview

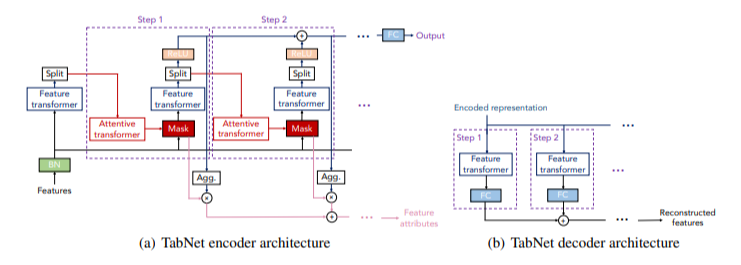

Through its feature-level design, TabNet uses standard DNN building blocks to implement a decision-tree-like output structure.

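1) Learns from the data which features matter for each instance and selects them

2) Each step makes a partial decision based on its selected features, and the results of several steps are combined into the final decision

3) Processes and learns the selected features nonlinearly

4) Achieves an ensemble-like effect through higher-dimensional representations and a multi-step structure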

Model flow: Feature transformer > Attentive transformer > Masking
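For reference, one decision step can be summarized roughly as below, following the paper's notation as I understand it: $\mathbf{f}$ is the input feature vector, $h_i$ a trainable function (FC + BN) inside the attentive transformer, $\mathbf{P}$ the prior scale, $\gamma$ a relaxation parameter, $f_i$ the feature transformer, $\mathbf{d}[i]$ the decision part and $\mathbf{a}[i]$ the part passed on to the next step. Treat it as a compact summary rather than a verbatim copy of the paper.

```latex
\begin{aligned}
\mathbf{M}[i] &= \mathrm{sparsemax}\big(\mathbf{P}[i-1]\cdot h_i(\mathbf{a}[i-1])\big), \qquad \mathbf{P}[0] = \mathbf{1},\\
\mathbf{P}[i] &= \prod_{j=1}^{i}\big(\gamma - \mathbf{M}[j]\big),\\
[\mathbf{d}[i], \mathbf{a}[i]] &= f_i\big(\mathbf{M}[i]\cdot\mathbf{f}\big),\\
\mathbf{d}_{\mathrm{out}} &= \sum_{i=1}^{N_{\mathrm{steps}}} \mathrm{ReLU}(\mathbf{d}[i]), \qquad \hat{y} = \mathbf{W}_{\mathrm{final}}\,\mathbf{d}_{\mathrm{out}}.
\end{aligned}
```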

2-1. Feature selection

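Feature selection is the process of choosing a subset of features that is useful for prediction. The most common methods are forward selection and Lasso regularization, which assess feature importance over the whole training set (global methods).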

In contrast, instance-wise feature selection chooses features separately for each instance.
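As a quick illustration of a *global* method, here is a minimal sketch with scikit-learn's `Lasso` on hypothetical synthetic data where only features 0 to 2 affect the target. The L1 penalty zeroes out the other coefficients, and the resulting subset is the same for every row, which is exactly what instance-wise selection relaxes. The data and `alpha=0.1` are assumptions for the example.

```python
import numpy as np
from sklearn.linear_model import Lasso

rng = np.random.default_rng(0)
n_samples, n_features = 500, 10
X = rng.normal(size=(n_samples, n_features))
# Hypothetical target: only features 0, 1, 2 matter, plus a little noise
y = 3.0 * X[:, 0] - 2.0 * X[:, 1] + 1.5 * X[:, 2] + 0.1 * rng.normal(size=n_samples)

lasso = Lasso(alpha=0.1).fit(X, y)
selected = np.flatnonzero(lasso.coef_)  # indices of non-zero coefficients
print(selected)  # one feature subset shared by every instance
```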

TabNet uses soft feature selection with controllable sparsity, learning feature selection and the output mapping end-to-end. In other words, the model selects features and produces its output at the same time.
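In the paper, the "controllable" part of the sparsity comes from an entropy-style regularizer on the masks, added to the loss with a coefficient λ_sparse (pytorch-tabnet exposes it as `lambda_sparse`). The sketch below computes that regularizer for masks of shape `(n_steps, batch, n_features)`; the function name and `eps` value are my own choices. A uniform mask gives roughly log(n_features), a one-hot mask gives roughly 0, so minimizing it pushes masks toward sparsity.

```python
import numpy as np

def sparsity_loss(masks, eps=1e-15):
    """Mean entropy of the masks, averaged over steps and batch rows."""
    n_steps, batch, _ = masks.shape
    return float(np.sum(-masks * np.log(masks + eps)) / (n_steps * batch))

n_steps, batch, n_features = 3, 2, 4
uniform = np.full((n_steps, batch, n_features), 1.0 / n_features)
one_hot = np.zeros((n_steps, batch, n_features))
one_hot[:, :, 0] = 1.0  # every step selects only feature 0

print(sparsity_loss(uniform))  # about log(4) = 1.386
print(sparsity_loss(one_hot))  # about 0
```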

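The attentive transformer computes the mask with sparsemax normalization. It also uses a prior scale that records how much each feature has already been used in previous steps: heavily used features get a smaller prior, which nudges later steps toward other features (the relaxation parameter γ controls how strongly).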
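Here is a NumPy sketch of the mask computation: a row-wise sparsemax (Martins & Astudillo, 2016) plus the prior-scale update P[i] = P[i-1]·(γ − M[i]). The random `logits` are a hypothetical stand-in for h_i(a[i-1]); in the real model they come from an FC + BN layer applied to the previous step's output. γ = 1.3 is an arbitrary choice for the example.

```python
import numpy as np

def sparsemax(z):
    """Row-wise sparsemax: Euclidean projection of each row onto the probability simplex."""
    z_sorted = np.sort(z, axis=1)[:, ::-1]
    k = np.arange(1, z.shape[1] + 1)
    cumsum = np.cumsum(z_sorted, axis=1)
    in_support = 1 + k * z_sorted > cumsum           # sorted entries that stay non-zero
    k_z = in_support.sum(axis=1, keepdims=True)      # support size per row
    tau = (np.take_along_axis(cumsum, k_z - 1, axis=1) - 1) / k_z
    return np.maximum(z - tau, 0.0)

rng = np.random.default_rng(0)
batch, n_features, n_steps, gamma = 4, 6, 3, 1.3
prior = np.ones((batch, n_features))                 # P[0] = 1: every feature is available
masks = []
for step in range(n_steps):
    logits = rng.normal(size=(batch, n_features))    # stand-in for h_i(a[i-1])
    mask = sparsemax(prior * logits)                 # M[i] = sparsemax(P[i-1] * h_i(a[i-1]))
    prior = prior * (gamma - mask)                   # used features get a smaller prior
    masks.append(mask)

print(np.round(masks[0], 3))  # each row sums to 1, many entries are exactly 0
```

Unlike softmax, sparsemax can assign exactly zero weight to a feature, which is what makes the per-instance selection "hard enough" to read off.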

2-2. Feature transformer and attentive transformer architecture

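Feature transformer: transforms the (masked) features and passes them on to the next stage

Attentive transformer: selects the important features at each decision step

Feature masking: multiplies the input features by the mask so that only the selected features are processed; the decision outputs of all steps are then aggregated into the final output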
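The three pieces can be wired together in a heavily simplified NumPy sketch of the forward pass. Assumptions, all hypothetical: BN is omitted, the same two GLU layers are reused at every step (the paper mixes shared and step-specific layers), and the masks are random rows normalized to sum to 1 instead of coming from an attentive transformer. The GLU layers with √0.5-scaled residuals and the ReLU-sum aggregation follow the paper's description.

```python
import numpy as np

rng = np.random.default_rng(0)

def glu_layer(x, w):
    """FC layer followed by a GLU: first half * sigmoid(second half). BN omitted."""
    h = x @ w
    half = h.shape[1] // 2
    return h[:, :half] * (1.0 / (1.0 + np.exp(-h[:, half:])))

def feature_transformer(x, weights):
    """Stacked GLU layers; later layers add a sqrt(0.5)-scaled residual connection."""
    h = glu_layer(x, weights[0])
    for w in weights[1:]:
        h = (h + glu_layer(h, w)) * np.sqrt(0.5)
    return h

batch, n_features, n_d, n_a, n_steps = 5, 8, 4, 4, 3
hidden = n_d + n_a
x = rng.normal(size=(batch, n_features))

# Weights reused at every step (simplification)
weights = [rng.normal(scale=0.3, size=(n_features, 2 * hidden)),
           rng.normal(scale=0.3, size=(hidden, 2 * hidden))]

d_out = np.zeros((batch, n_d))
for step in range(n_steps):
    # Hypothetical mask; in TabNet it comes from the attentive transformer (sparsemax)
    mask = rng.random((batch, n_features))
    mask /= mask.sum(axis=1, keepdims=True)
    h = feature_transformer(mask * x, weights)   # feature masking, then transformation
    d, a = h[:, :n_d], h[:, n_d:]                # d: decision part, a: would feed the next attentive transformer
    d_out += np.maximum(d, 0.0)                  # aggregate ReLU(d[i]) across steps

w_final = rng.normal(size=(n_d, 2))              # final linear mapping, e.g. 2 classes
logits = d_out @ w_final
print(logits.shape)  # (5, 2)
```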

2-3. Ghost Batch Normalization

A variant of batch normalization that aims to keep BN's benefits when training with large batches.

It was proposed by Hoffer, Hubara, and Soudry (2017).

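In DNNs, batch normalization normalizes the inputs of each batch, which stabilizes training and speeds it up. With very large batches, however, the statistics (mean and variance) become too global: they lose the batch-to-batch noise that gives BN part of its regularizing effect, and the model may generalize worse. On the other hand, statistics computed over very small batches are noisy and unreliable, so there is a trade-off.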

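Ghost BN splits a large batch into smaller virtual batches and computes the statistics separately for each one, so the normalization reflects local statistics. This keeps training stable and consistent while still using a large batch.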
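A minimal training-time sketch of the idea, with a hypothetical `ghost_batch_norm` function: split the batch into chunks of `virtual_batch_size` rows and normalize each chunk with its own mean and variance. Learnable scale/shift and the running statistics used at inference are left out. The `virtual_batch_size` argument of pytorch-tabnet's `fit` (used in the example in section 4) plays the role of the chunk size here.

```python
import numpy as np

def ghost_batch_norm(x, virtual_batch_size, eps=1e-5):
    """Normalize each virtual batch with its own mean and variance (no learned scale/shift)."""
    n_chunks = int(np.ceil(len(x) / virtual_batch_size))
    chunks = np.array_split(x, n_chunks, axis=0)
    normed = [(c - c.mean(axis=0)) / np.sqrt(c.var(axis=0) + eps) for c in chunks]
    return np.concatenate(normed, axis=0)

rng = np.random.default_rng(0)
x = rng.normal(loc=5.0, scale=2.0, size=(1024, 3))   # one large batch
out = ghost_batch_norm(x, virtual_batch_size=128)    # 8 virtual batches of 128 rows
print(out[:128].mean(axis=0).round(6), out[:128].std(axis=0).round(3))
```

Each virtual batch ends up with mean 0 and standard deviation close to 1 on its own, rather than only the batch as a whole.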

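TabNet applies Ghost BN to every layer except the input features. The input features get ordinary BN, because low-variance statistics averaged over the whole batch are beneficial there, while Ghost BN in the rest of the network stabilizes training and speeds it up.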

> The project I'm currently working on applies standard normalization to all the data before batching. It might be worth trying BN there.

3. Experiment

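- Datasets:
  - Six synthetic tabular datasets (Syn1-Syn6), each with 10,000 training samples.
  - The datasets are constructed so that only specific features determine the output.
- Global feature selection:
  - In Syn1-Syn3, the salient features are the same for every instance. For example, the output of Syn2 depends on features X3-X6.
  - In this setting, global feature selection works well.
- Instance-dependent feature selection:
  - In Syn4-Syn6, the salient features differ from instance to instance. For example, the output of Syn4 depends on either X1-X2 or X3-X6, depending on the value of X11.
  - Here global feature selection is suboptimal, and instance-wise feature selection is needed.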
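To see why global selection struggles on something like Syn4, here is a hypothetical generator in the same spirit. The logit formulas and the `X11 < 0` switch are my assumptions, not the paper's exact definitions. It also returns the per-instance ground-truth mask (counting X11 itself as relevant, another assumption): each row needs only 3 or 5 features, but a global method has to keep the union of 7.

```python
import numpy as np

def make_syn4_like(n_samples=10_000, n_features=11, seed=0):
    """Hypothetical Syn4-style data: X11 decides whether X1-X2 or X3-X6 drive the label."""
    rng = np.random.default_rng(seed)
    X = rng.normal(size=(n_samples, n_features))
    # Column index = feature number - 1 (X1 -> 0, X11 -> 10)
    logit_a = X[:, 0] * X[:, 1]                       # XOR-like term on X1, X2
    logit_b = np.sum(X[:, 2:6] ** 2, axis=1) - 4.0    # "orange skin"-like term on X3..X6
    use_a = X[:, 10] < 0                              # assumed switch on X11
    logit = np.where(use_a, logit_a, logit_b)
    y = (rng.random(n_samples) < 1.0 / (1.0 + np.exp(-logit))).astype(int)

    relevant = np.zeros((n_samples, n_features), dtype=bool)
    relevant[use_a, 0:2] = True
    relevant[~use_a, 2:6] = True
    relevant[:, 10] = True                            # X11 always matters for the switch
    return X, y, relevant

X, y, relevant = make_syn4_like()
print("features per instance:", np.unique(relevant.sum(axis=1)))
print("features a global method must keep:", relevant.any(axis=0).sum())
```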

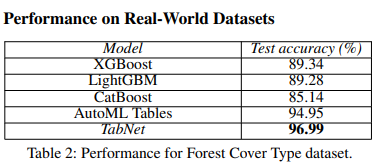

Performance comparison

- TabNet's performance:
  - TabNet is compared with the following methods:
    - Tree Ensembles (Geurts et al. 2006)
    - LASSO regularization
    - L2X (Chen et al. 2018)
    - INVASE (Yoon et al. 2019)
  - On Syn1-Syn3, TabNet comes close to the performance of global feature selection, which means it can identify the globally important features effectively.
  - On Syn4-Syn6, TabNet improves on global feature selection by removing redundant features instance by instance.
  - TabNet is a single architecture with 26k parameters for Syn1-Syn3 and 31k parameters for Syn4-Syn6. The other methods use a predictive model with 43k parameters, and INVASE has 101k parameters in total (it includes the two models of its actor-critic framework).

4. TabNet PyTorch hands-on and takeaways

TabNet was once widely used on Kaggle.

Below is an example of using TabNet on a classification problem, with PyTorch code (the pytorch-tabnet library).

4-1. Import the main libraries, load the Titanic data, and do some light preprocessing.

```python
import numpy as np
import pandas as pd
import torch  # needed later for optimizer_fn / scheduler_fn
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler, LabelEncoder
from sklearn.metrics import accuracy_score
from pytorch_tabnet.tab_model import TabNetClassifier

# Download the data
url_train = 'https://raw.githubusercontent.com/datasciencedojo/datasets/master/titanic.csv'
df = pd.read_csv(url_train)

# Preprocessing: fill missing values. Assign the result back instead of calling
# fillna(..., inplace=True) on a column, which triggers a FutureWarning in pandas 2.x
# and has no effect under copy-on-write.
df['Age'] = df['Age'].fillna(df['Age'].median())
df['Embarked'] = df['Embarked'].fillna(df['Embarked'].mode()[0])
df['Fare'] = df['Fare'].fillna(df['Fare'].median())

# Encode the categorical columns as integers
df['Sex'] = LabelEncoder().fit_transform(df['Sex'])
df['Embarked'] = LabelEncoder().fit_transform(df['Embarked'])
```

4-2. Split the data into X (inputs) and y (target). The model predicts whether each passenger survived, based on the passenger's attributes. After the split, standardize all input features so they share the same scale.

```python
# Separate features and label
X = df[['Pclass', 'Sex', 'Age', 'SibSp', 'Parch', 'Fare', 'Embarked']].values
y = df['Survived'].values

# Train/test split
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

# Standardize (the scaler is fit on the training set only)
scaler = StandardScaler()
X_train = scaler.fit_transform(X_train)
X_test = scaler.transform(X_test)
```

4-3. Set the TabNet hyperparameters, then train and evaluate.

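```python
# Build the TabNet model
clf = TabNetClassifier(
    optimizer_fn=torch.optim.Adam,
    optimizer_params=dict(lr=2e-2),
    scheduler_fn=torch.optim.lr_scheduler.StepLR,
    scheduler_params=dict(step_size=10, gamma=0.9),
    mask_type='sparsemax',  # 'sparsemax' or 'entmax'
)

# Train
clf.fit(
    X_train, y_train,
    eval_set=[(X_test, y_test)],  # the test set doubles as the early-stopping set,
                                  # so the accuracy below is somewhat optimistic
    batch_size=1024,              # larger than the 712 training rows: one batch per epoch
    virtual_batch_size=128,       # Ghost BN virtual batch size
    num_workers=0,
    max_epochs=100,
    patience=10,                  # stop after 10 epochs without improvement
)

# Predict and evaluate
y_pred = clf.predict(X_test)
accuracy = accuracy_score(y_test, y_pred)
print(f"Accuracy: {accuracy:.4f}")
```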

Accuracy: 0.9200

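Although the paper says it uses attention, it is not the attention mechanism we know today (the query-key-value attention of Transformers); TabNet's "attention" is a learned, sparse per-instance mask over the input features. I suspect the term was used somewhat loosely at the time.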

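When we computed VAEP with a machine-learning model (XGBoost) it worked well, but when we recently tried DNNs the results were poor. A fellow lab member presented this paper after looking for an alternative.

The professor said he was curious why Ghost Batch Normalization was used as the remedy, and that ideas like this could be a real help in our future research.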
